$4.4 Trillion, Model Collapse, The Beatles, AI Prompts, and EU Regulation

This week’s update:

Here’s this week’s news, product applications, and broader philosophical implications. So here we go:

💰 $4.4 trillion

🧮 Model Collapse

🛒 Online Shopping

🎵 The Beatles

✒️ Prompting

🇪🇺 AI Act

Latest News and Updates

The economic potential of generative AI: The next productivity frontier

A new report released by McKinsey this week suggests that generative AI could add up to $4.4 trillion of value to the global economy each year. Together with other technologies, it could automate work activities that absorb 60 to 70 percent of employees’ time today, with knowledge workers most affected.

Generative AI’s impact on productivity could add trillions of dollars in value to the global economy. Our latest research estimates that generative AI could add the equivalent of $2.6 trillion to $4.4 trillion annually across the 63 use cases we analyzed—by comparison, the United Kingdom’s entire GDP in 2021 was $3.1 trillion. This would increase the impact of all artificial intelligence by 15 to 40 percent. This estimate would roughly double if we include the impact of embedding generative AI into software that is currently used for other tasks beyond those use cases.

The AI feedback loop: Researchers warn of ‘model collapse’ as AI trains on AI-generated content

We’re only about six months into our new age of generative AI, but we may already be seeing a huge problem: model collapse. Until now, most of what AI models have been trained on has been human-produced content. But as more AI-generated content is published, more of it ends up in the data used to train new models, and models don’t do well when trained on AI-generated content.

Now, as more people use AI to produce and publish content, an obvious question arises: What happens as AI-generated content proliferates around the internet, and AI models begin to train on it, instead of on primarily human-generated content?

A group of researchers from the UK and Canada has looked into this very problem and recently published a paper on their work on the open-access preprint server arXiv. What they found is worrisome for current generative AI technology and its future: “We find that use of model-generated content in training causes irreversible defects in the resulting models.”
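To build some intuition for why training on AI-generated content can cause lasting damage, here is a toy simulation in Python. It is an illustrative assumption, not the researchers’ actual setup: each “model” is just a Gaussian fitted to the previous generation’s data, and the function name `simulate_collapse` and its parameters are hypothetical. Because each generation learns only from a small, finite sample of the previous model’s output, estimation errors compound and the fitted spread tends to shrink, so the rare values in the tails disappear first.

```python
import random
import statistics


def simulate_collapse(n_samples=10, generations=200, seed=0):
    """Toy model collapse (hypothetical helper): each generation is 'trained'
    only on samples produced by the previous generation's fitted model.
    Returns a list of (mean, std) pairs, one per fitted generation."""
    rng = random.Random(seed)
    # Generation 0: "human" data drawn from a standard normal distribution.
    data = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    history = []
    for _ in range(generations + 1):
        # "Train": fit a Gaussian by maximum likelihood (mean, population std).
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        history.append((mu, sigma))
        # "Publish": the next generation sees only samples from the fitted model.
        data = [rng.gauss(mu, sigma) for _ in range(n_samples)]
    return history


history = simulate_collapse()
for gen in (0, 50, 100, 200):
    mu, sigma = history[gen]
    print(f"generation {gen:3d}: mean={mu:+.3f}, std={sigma:.3g}")
```

In this toy setup the fitted standard deviation drifts toward zero over a few hundred generations. Raising `n_samples` slows the collapse, but with any finite sample the downward drift does not go away.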

Google’s New AI Tool Is About to Make Online Shopping Even Easier

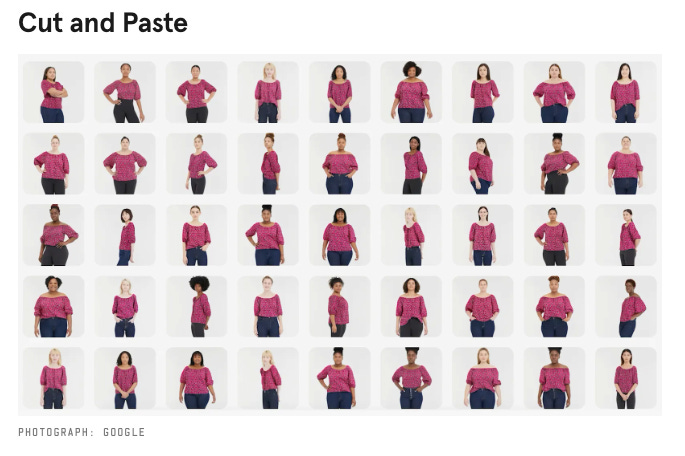

Imagine having an avatar of yourself that could try on clothes from all sorts of brands and help you decide what looks good and what to buy. Google’s new tool is a step in that direction. Shopping for clothes online has always been harder than shopping for other goods, so this could be a meaningful improvement.

Now, customers in the United States can virtually “try on” women’s tops. The company shows AI-generated versions of clothes from hundreds of brands sold across Google, like Anthropologie, Everlane, and H&M, on images of real models ranging in size from XXS to 3XL. You can scroll through and select different body types or skin tones to see how clothes might drape on a body like yours. When you find the model that most closely resembles you, you can save them as your default model.

Paul McCartney says AI tools helped rescue John Lennon vocals for ‘last Beatles record’

It’s not just living artists who will use AI to create new music; it can also help bring back the voices of musicians who are no longer with us.

According to McCartney, technology developed for the recent Beatles documentary Get Back was able to extract former bandmate John Lennon’s vocals from a low-quality cassette recording in order to create the foundation for the track.

Useful Tools & Resources

This week I explored tools to help with AI prompting. This is a broad topic, so there’s more to come. I’ll be building a library of these tools soon, so check back for the full collection.

PromptPal

PromptPal lets you search through many types of prompts for GPT, DALL-E, Midjourney, and more. It also has specific categories like Product Management prompts, which I want to explore further.

If you’re looking for specific prompt ideas, this is a good place to start.

PromptBase

PromptBase is a site where you can create prompts yourself, buy prompts that others have created, and sell ones that have worked well for you. If you don’t want to take the time to learn prompting, this seems like a good place to get what you need quickly. And if you’re good at prompting, it’s a place to sell your work.

Hugging Face

This one has some interesting potential. It didn’t do what I had envisioned when I tried one of the prompts, but this is why we test lots of things, right?

Noonshot

I really liked Noonshot for Midjourney prompting. It gives you lots of guidance for what to add. Again, not at all what I anticipated, but that probably speaks to my need for more time in Midjourney.

Deep Dive - EU Regulation

The European Union has generally led the way in regulating technology and protecting users online. This week it took a significant step toward regulating AI with the AI Act.

According to the NY Times:

The European Parliament, a main legislative branch of the European Union, passed a draft law known as the A.I. Act, which would put new restrictions on what are seen as the technology’s riskiest uses. It would severely curtail uses of facial recognition software, while requiring makers of A.I. systems like the ChatGPT chatbot to disclose more about the data used to create their programs.

The EU has traditionally been at the forefront of thinking about these issues.

The European Union is further along than the United States and other large Western governments in regulating A.I. The 27-nation bloc has debated the topic for more than two years, and the issue took on new urgency after last year’s release of ChatGPT, which intensified concerns about the technology’s potential effects on employment and society.

Protecting people while fostering innovation is a difficult balance to strike. According to the European Parliament:

Parliament’s priority is to make sure that AI systems used in the EU are safe, transparent, traceable, non-discriminatory and environmentally friendly. AI systems should be overseen by people, rather than by automation, to prevent harmful outcomes.

Wisely, the EU is sorting AI systems into four risk categories:

Unacceptable Risk

High Risk

Limited Risk

Minimal/No Risk

But regulation isn’t easy. First, governments move slowly, while technology, and AI in particular, is moving faster than anything we’ve seen before.

According to the Brookings Institution:

To keep the corporate AI race from becoming reckless requires the establishment and development of rules and the enforcement of legal guardrails. Dealing with the velocity of AI-driven change, however, can outstrip the federal government’s existing expertise and authority. The regulatory statutes and structures available to the government today were built on industrial era assumptions that have already been outpaced by the first decades of the digital platform era. Existing rules are insufficiently agile to deal with the velocity of AI development.

Second, as the EU’s approach reflects, we have to decide what to regulate, what not to, and what to regulate differently.

Because AI is a multi-faceted capability, “one-size-fits all” regulation will over-regulate in some instances and under-regulate in others. The use of AI in a video game, for instance, has a different effect—and should be treated differently—from AI that could threaten the security of critical infrastructure or endanger human beings. AI regulation, thus, must be risk-based and targeted.

There’s also the question of whether to regulate AI applications or the development of AI itself. According to The Wall Street Journal:

Tech companies and their lobbyists argue that any government-enforced rules should focus on specific AI applications—and not put too many restrictions on how AI is developed, as is being proposed in Europe. They say such an approach would impede innovation.

And who gets to regulate? Too often, companies regulate themselves; sometimes that works, and sometimes it doesn’t. And while the US has traditionally led in creating technology, the EU has typically set the frameworks for regulating it, in part because it moves earlier and more comprehensively.

Once again it appears as though the EU, which has been in the lead in establishing digital platform policy with its Digital Markets Act and Digital Services Act, is also in the lead on establishing AI policy. On June 14 the European Parliament overwhelmingly approved the AI Act. Following its adoption, the regulatory machinery of the European Commission will begin developing enforceable policies.

We certainly need to think carefully about how AI is used and regulated. How do we keep benefiting from advances in AI while managing its risks and impacts on people? This is just the beginning.